Probability distribution from an image

- Removed 2d solutions: 02.11.2008

- Original text: 30.10.2008

Problem

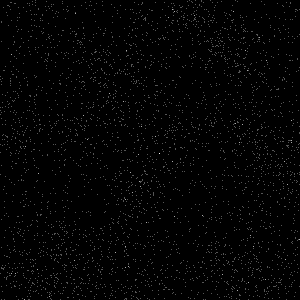

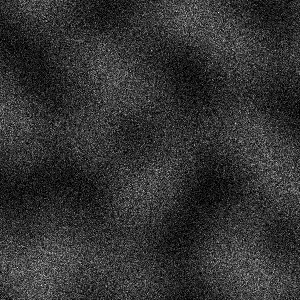

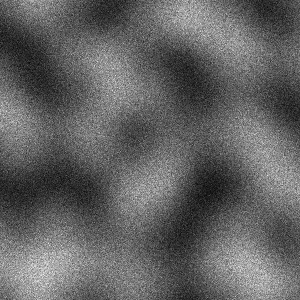

The problem is to generate random pixel positions from a probability distribution described by a grayscale image. To visualize the generated pixels we add a constant amount of intensity to the generated position. In the end we rescale the resulting image back to [0, 1] range. Using such a visualization, a correct generation algorithm should convergence towards the original image (or a multiple of it) as the number of generated pixels increases.

Solution

S = an array of 3-tuples (x, y, value), empty at start

cumulativeValue = 0

for each pixel (x, y)

if image(x, y) > 0

cumulativeValue += image(x, y)

append (x, y, cumulativeValue) to S

repeat desired amount of times

randomValue = random value in [0, cumulativeValue]

pick the first element s of S that has s.value >= randomValue [*]

output s

[*] Since S is an array ordered by ‘value’, we can use a binary search to find the element in logarithmic time.

Results

For 10 000 000 points, the algorithm took 10 seconds on my machine.

Notes

I also tried different approaches to this problem which worked in 2d. They worked by subdividing the image hierarchically down to a single pixel. However, these approaches gave worse results since they needed log(n) + log(m) = log(nm) random variables per point compared to one random variable for the presented technique. After going through the implementation of these algorithms I am pretty sure that the presented technique is near optimal in practical terms.